PyData Meetup: Creating practical applications for machine learning

PyData Dublin launched in September 2017 in Workday, playing host to two Google scholars, Dr. Barry Smyth and Dr. Derek Greene.

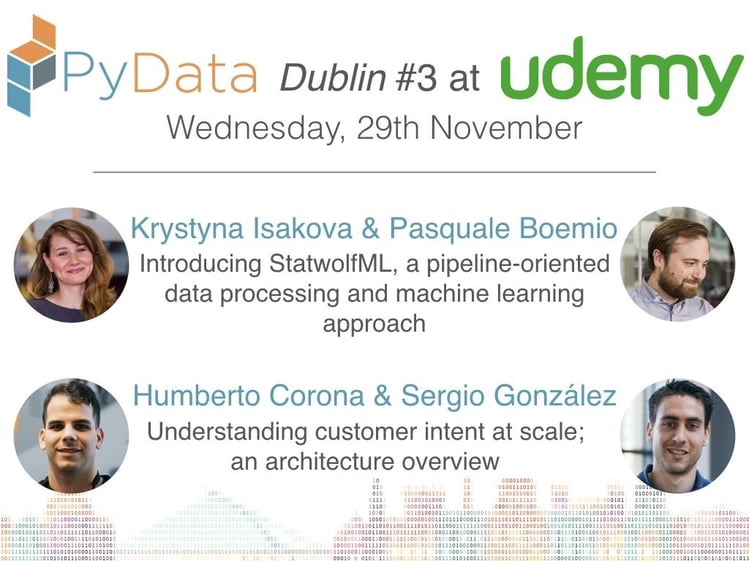

It was the first of a trio of successful events at which PhD students and professionals with an interest in data science gathered in notable locations around Dublin: first Workday, then Zalando, and now Udemy, where Statwolf’s Krystyna Isakova and Pasquale Boemio joined Zalando’s Humberto Corona and Sergio González to talk all things data.

On a global scale, the PyData network promotes best practices, new approaches, and knowledge sharing – and namechecks participants from some of the biggest organisations in the world.

Introducing StatwolfML at PyData

PyData’s core mission is to showcase applications of data, so Krystyna and Pasquale spoke about the intricacies of StatwolfML and how it applies in the real world.

Statwolf’s framework is a JSON-driven wrapper for machine learning. The process happens in three phases:

- The data is obtained.

- The data is cleaned.

- The algorithms are chosen and applied, with output in JSON.
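To make the three phases concrete, here is a minimal sketch of what a JSON workflow description could look like. The key names (`acquire`, `clean`, `model`) and their values are hypothetical, chosen only for illustration; they are not StatwolfML's actual schema.

```python
import json

# Hypothetical workflow description: every key and value below is an
# illustrative assumption, not StatwolfML's real schema.
workflow = {
    "acquire": {"source": "iris.csv"},
    "clean": [
        {"step": "fill_missing", "strategy": "mean"},
        {"step": "encode_labels", "column": "species"},
    ],
    "model": {"algorithms": ["knn", "decision_tree"], "output": "json"},
}

# Serialising to JSON text is what lets a workflow be stored and retrieved.
spec_text = json.dumps(workflow, indent=2)
restored = json.loads(spec_text)

# Dicts keep insertion order, so the phases come back in the order written.
phases = list(restored)
print(phases)
```

Because the whole workflow is plain data, saving it to a file or a database and loading it back later yields exactly the same description.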

StatwolfML has been developed with a declarative syntax, so the user doesn’t need to write any code for the framework to work. The workflows are also reproducible: each machine learning workflow can easily be stored and retrieved.

In a commercial sense, the big benefit of that declarative syntax is that the user doesn’t need to be able to write code. Instead, you essentially ask the framework to interrogate the data and apply the relevant algorithms. Statistical techniques are applied to the data and predictions are made with a registered output.

The software is built on a plugin architecture, so it’s easy to add features and extend the interface within the Statwolf framework. Jobs are processed in a job queue within the ecosystem, and outputs are stored in the database.
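As a rough illustration of the plugin and job-queue idea, the sketch below registers steps by name and processes jobs first-in, first-out. The names `PLUGINS`, `plugin`, and `run_queue` are hypothetical, and a plain dict stands in for the database; none of this is taken from Statwolf's code.

```python
from collections import deque

# Hypothetical plugin registry: maps a step name to the function behind it.
PLUGINS = {}

def plugin(name):
    """Register a function under `name` so jobs can refer to it by string."""
    def register(func):
        PLUGINS[name] = func
        return func
    return register

@plugin("drop_missing")
def drop_missing(rows):
    # Keep only rows that have no missing (None) values.
    return [r for r in rows if None not in r]

@plugin("count_rows")
def count_rows(rows):
    return len(rows)

def run_queue(jobs, store):
    """Process jobs first-in, first-out and save each result under its job id."""
    queue = deque(jobs)
    while queue:
        job = queue.popleft()
        store[job["id"]] = PLUGINS[job["step"]](job["data"])
    return store

data = [(5.1, 3.5), (4.9, None)]
results = run_queue(
    [
        {"id": "job-1", "step": "drop_missing", "data": data},
        {"id": "job-2", "step": "count_rows", "data": data},
    ],
    store={},  # a dict stands in for the database
)
print(results)
```

Adding a feature in this sketch means writing one more decorated function; the queue and the storage code stay untouched.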

In turn, each workflow is divided into two stages:

- Training: A ‘training data set’ is created from real data. It’s analysed by the framework and predictive models are created.

- Prediction: The data is cleaned and predictions are made based on the training stage results. Using the output of the previous phase, the preprocessing transformations and trained models are applied to the unseen dataset. At the end of these two steps, the predictions for each model are collected in a customisable output object.
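The split between the two stages can be sketched in a few lines. This example assumes mean imputation for cleaning and a nearest-centroid classifier as the model; both are stand-ins picked for brevity, not the algorithms StatwolfML necessarily uses, and the function names are hypothetical.

```python
from statistics import mean

def train(rows, labels):
    """Training stage: learn fill values and one centroid per class."""
    n_features = len(rows[0])
    # Column means computed from the known values only.
    fill = [mean(r[i] for r in rows if r[i] is not None) for i in range(n_features)]
    cleaned = [[fill[i] if v is None else v for i, v in enumerate(r)] for r in rows]
    centroids = {}
    for label in set(labels):
        members = [r for r, l in zip(cleaned, labels) if l == label]
        centroids[label] = [mean(col) for col in zip(*members)]
    return {"fill": fill, "centroids": centroids}

def predict(state, rows):
    """Prediction stage: reuse the stored fill values, then pick the nearest centroid."""
    out = []
    for r in rows:
        x = [state["fill"][i] if v is None else v for i, v in enumerate(r)]
        out.append(min(
            state["centroids"],
            key=lambda c: sum((a - b) ** 2 for a, b in zip(x, state["centroids"][c])),
        ))
    return out

# Toy training data: (petal length, petal width), with one missing value.
state = train(
    [[1.4, 0.2], [1.3, None], [4.7, 1.4], [4.5, 1.5]],
    ["setosa", "setosa", "versicolor", "versicolor"],
)
predictions = predict(state, [[1.5, 0.3], [4.6, None]])
print(predictions)
```

The point of the design is that `predict` never recomputes anything from the unseen data: the fill values and the model both come from the training output.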

While the explanation of the technology at play can be quite dense, the entire process is crafted to be as simple as possible for the client, such that they can run jobs without the need for coding.

StatwolfML: commercial appeal

According to Pasquale and Krystyna, Statwolf’s USP is machine learning software that’s intuitive, client-orientated, and easy to use.

Indeed, StatwolfML has benefits across the board. For in-house data scientists and analysts, it allows for more efficient analysis.

As the system is built on declarative syntax, analysts can focus on the algorithm selection and creation stages rather than on coding and preliminary statistical analysis. For busy analysts, this can save hours of work – which, in turn, is reflected in project spend and hours burned.

Secondly, StatwolfML is easy to implement, so the algorithms, predictions, and solutions can be up and running with a quick turnaround, leading to time and cost savings for the business.

Essentially, StatwolfML makes a complex system and architecture much more accessible – and the framework is designed to be adaptable to various use-cases.

In finance, for example, fraud detection has risen to the fore thanks to machine learning software like Statwolf’s. According to CreditDonkey, 46 percent of Americans have been victims of credit card fraud in the past five years. That’s more than a hundred million people – and billions in fraudulent transactions.

Given the sheer number of daily credit card transactions, a human monitoring system could only ever be flawed. The modern, machine learning approach takes human error out of the equation by using analytical models to examine a bank’s dataset such that it can more readily flag fraudulent transactions – and prevent financial fraud.

Other examples of impacted industries are automotive and healthcare, which have an ever-growing demand for data analysis. According to IBM, demand for data scientists and analysts will spike by 28 percent by 2020. The reasoning is clear: data science is a huge opportunity for businesses, both small and large.

In the automotive industry, a study by the US Department of Energy said that a “functional predictive maintenance program can reduce maintenance costs by 30 percent, reduce downtime by 45 percent, and eliminate breakdowns by as much as 75 percent.” In healthcare, the market for artificial intelligence is projected to grow by 40 percent a year, to $6.6 billion in 2021.

StatwolfML: A simple study in how it works

Given PyData’s emphasis on real-world applications of data analysis, Krystyna and Pasquale showcased a demo of StatwolfML during the course of their talk by examining the iris dataset.

Using data based on the flower’s physical features, StatwolfML quickly identified the class of each iris flower.

The step-by-step instructions for the model are stored in the input JSON file, and the process goes as follows:

Step 1: Data acquisition

Krystyna and Pasquale took iris flower data from a dataset available on the internet and loaded it into StatwolfML, deliberately adding NaN (i.e. not a number) values to test the robustness of the package.
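A minimal sketch of this step, assuming the data arrives as CSV text: three sample rows in the usual iris column layout are parsed, and a NaN is written into a chosen cell. The helper names `load_rows` and `inject_nan` are hypothetical and are not part of StatwolfML.

```python
import csv
import io
import math

# Three sample rows in the usual iris column layout; a real run would
# read the full dataset from a file or URL instead.
RAW = """sepal_length,sepal_width,petal_length,petal_width,species
5.1,3.5,1.4,0.2,setosa
7.0,3.2,4.7,1.4,versicolor
6.3,3.3,6.0,2.5,virginica
"""

def load_rows(text):
    """Parse CSV text into dicts, converting the four measurements to floats."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        row = {k: (v if k == "species" else float(v)) for k, v in record.items()}
        rows.append(row)
    return rows

def inject_nan(rows, positions):
    """Return a copy with NaN written at each (row index, column name) position."""
    damaged = [dict(r) for r in rows]
    for index, column in positions:
        damaged[index][column] = math.nan
    return damaged

rows = load_rows(RAW)
damaged = inject_nan(rows, [(1, "petal_length")])
print(damaged[1])
```

Working on a copy keeps the original rows intact, so the cleaned result can later be compared against the true values.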

Step 2: Preprocessing

The second step prepares the data for the machine learning models by cleaning the NaN values with different techniques and encoding the iris classes as numerical values.

Once that’s done, there’s a clean dataset to work with.
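The two preprocessing operations can be sketched with the standard library alone. Mean and median filling are shown as two examples of "different techniques"; which ones the speakers actually used is not stated, so treat the choice, and the function names, as assumptions.

```python
import math
from statistics import mean, median

def fill_nan(column, strategy="mean"):
    """Replace NaN entries using the mean or median of the known values."""
    known = [v for v in column if not math.isnan(v)]
    fill = mean(known) if strategy == "mean" else median(known)
    return [fill if math.isnan(v) else v for v in column]

def encode_labels(labels):
    """Map each distinct class name to an integer, in sorted order."""
    mapping = {name: code for code, name in enumerate(sorted(set(labels)))}
    return [mapping[name] for name in labels], mapping

# Toy column with one missing measurement.
petal_length = [1.0, math.nan, 5.0, 6.0]
species = ["setosa", "setosa", "virginica", "versicolor"]

filled_mean = fill_nan(petal_length, "mean")
filled_median = fill_nan(petal_length, "median")
codes, mapping = encode_labels(species)
print(filled_mean, filled_median, codes, mapping)
```

Returning the mapping alongside the codes matters: the same mapping is needed later to turn numeric predictions back into class names.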

Step 3: Modelling

In the modelling step, the relevant classification algorithms are applied to the data. In the case of the iris dataset, Krystyna and Pasquale applied four different classification algorithms.

These algorithms produce a new JSON object, which contains the encoded trained models.
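One common way to carry a trained model inside JSON is to pickle it and base64-encode the bytes. The sketch below assumes that approach purely for illustration; the article does not say how StatwolfML encodes its models, and the `models` key and function names are hypothetical.

```python
import base64
import json
import pickle

# Stand-in for a trained model: here just a dict of learned parameters.
trained_model = {"algorithm": "nearest_centroid", "centroids": {"setosa": [1.4, 0.2]}}

def encode_model(model):
    """Pickle the model and base64-encode the bytes so they fit in a JSON string."""
    return base64.b64encode(pickle.dumps(model)).decode("ascii")

def decode_model(text):
    """Reverse encode_model. Only unpickle data from a source you trust."""
    return pickle.loads(base64.b64decode(text))

# The training output: a JSON object holding the encoded model.
output = json.dumps({"models": {"nearest_centroid": encode_model(trained_model)}})

# Later, the prediction stage can recover the model from that JSON text.
restored = decode_model(json.loads(output)["models"]["nearest_centroid"])
print(restored == trained_model)
```

Base64 is used because JSON strings cannot hold raw bytes; the trade-off is an encoded payload roughly a third larger than the pickle itself.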

From there, the framework has everything it needs to perform the selected preprocessing jobs and make predictions – all within the span of three steps.

While the technology and code at work may seem impenetrable to many people, the varied use-cases and early success mean that machine learning and its associated language will become a part of business vernacular in years to come.

However, it’s the early adopters who will see the biggest wins – so why wait?

Interested in machine learning?

If you want to harness the power of artificial intelligence in your business, Statwolf’s data science service can help, with advanced online data visualisation and analysis simply running in your web browser.

We offer a range of custom services to suit your needs: advanced data analysis and modelling, plus custom algorithm creation and implementation, with a particular focus on predictive maintenance.

We’ve helped a host of clients get the most out of their data. Are you going to be next?